Unlocking the full potential of customer service with ChatGPT

Navigating the challenges and opportunities of large language models (LLMs)

Now that the dust is beginning to settle, we can form a better-informed view of what ChatGPT is and where it could be used. ChatGPT is the closest thing yet to the AI we frequently see in science fiction movies, and a big jump from what we were used to. It’s a revolution that will fundamentally disrupt many different aspects of our lives, but it’s (very) far from reaching human intelligence and replacing humans. It will not make human labor obsolete, but it will boost productivity and efficiency if used correctly.

But before we get there, let’s first answer some fundamental questions: what ChatGPT is, how it was trained, and where it shines and where it fails. Along the way, we will look at how product teams can use it to automate and streamline their customer service operations.

ChatGPT and Language Models

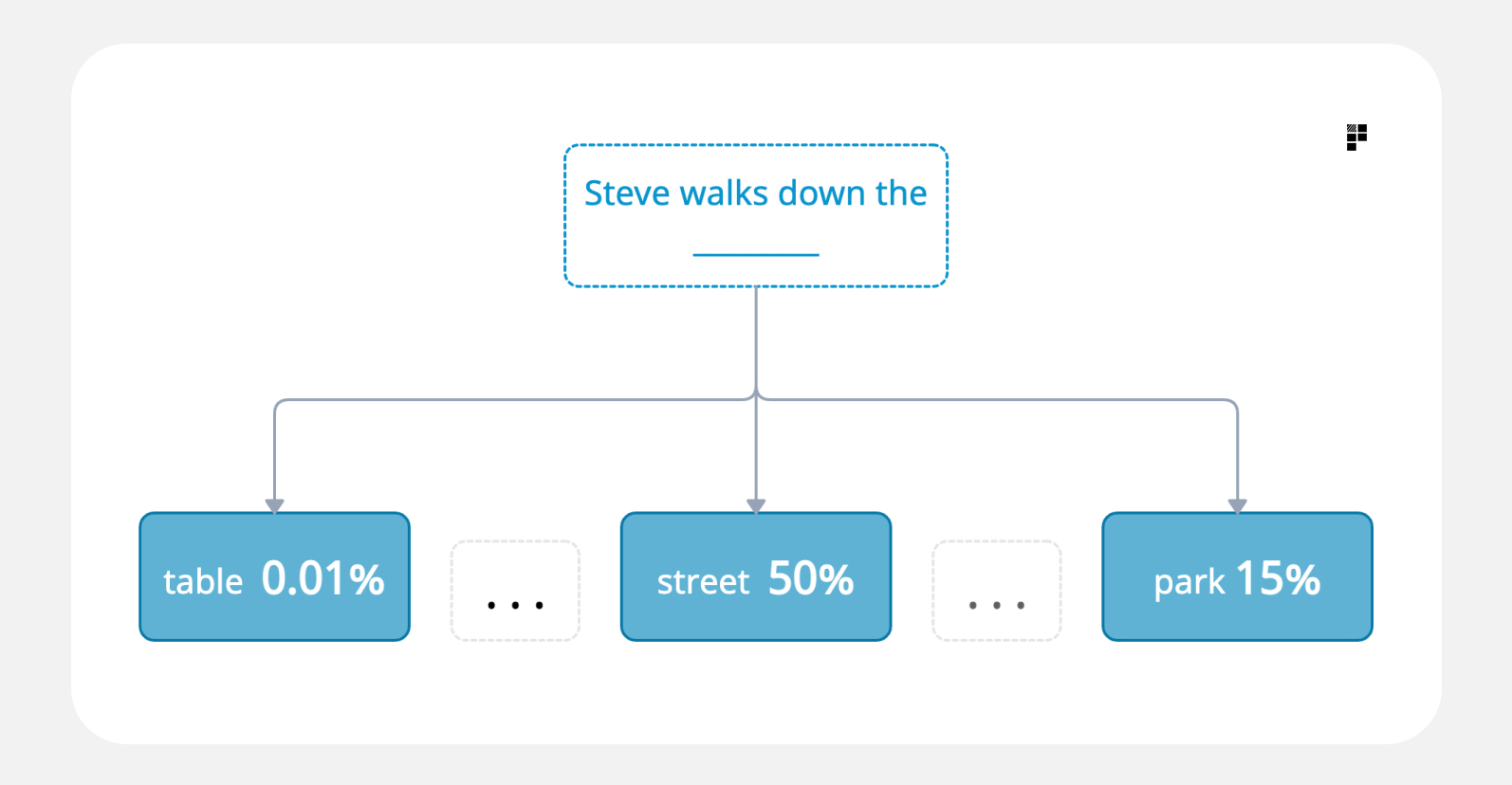

ChatGPT is what the field of Natural Language Processing (NLP) calls a “Language Model”, and Language Models are not new. They have been studied for decades and existed long before ChatGPT. Simply put, a Language Model estimates how likely a certain word is to appear in a given spot in a sentence, given the surrounding context. Consider this example: “Steve walks down the ___”. A Language Model assigns a probability to every word in its vocabulary for the blank space. For example, the word “street” would get a high probability, e.g. 50%, and the word “table” would get a low probability, e.g. 0.01%.

Why is this important? If you think about it, this is what ChatGPT does at its core: it generates text one word at a time, choosing a high-probability next word given the prompt and the conversation history.
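To make the idea concrete, here is a minimal sketch of a toy Language Model that only looks at the previous word (a "bigram" model). The corpus and all function names are made up for illustration. Systems like ChatGPT use neural networks over much longer contexts and do not always pick the single most likely word, so this is an analogy for the principle, not a description of how ChatGPT is implemented.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, for illustration only.
CORPUS = [
    "steve walks down the street",
    "anna walks down the street",
    "steve walks down the road",
    "the cat sits on the table",
]


def train_bigram_model(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev_word, next_word in zip(words, words[1:]):
            counts[prev_word][next_word] += 1
    return counts


def next_word_probabilities(counts, prev_word):
    """Turn the raw follow-up counts into a probability distribution."""
    followers = counts.get(prev_word, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def most_likely_next_word(counts, prev_word):
    """Greedy choice: return the highest-probability next word, or None."""
    probs = next_word_probabilities(counts, prev_word)
    if not probs:
        return None  # the model has never seen this word
    return max(probs, key=probs.get)


model = train_bigram_model(CORPUS)
probs_after_the = next_word_probabilities(model, "the")
print(probs_after_the)
print(most_likely_next_word(model, "the"))
```

In this toy corpus, “street” follows “the” more often than any other word, so it gets the highest probability for the blank in “Steve walks down the ___”.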

ChatGPT is an open-domain chatbot built on the GPT-3 family of Large Language Models (LLMs); GPT-3 itself contains 175 billion parameters. GPT-3 was trained on an immense text corpus drawn largely from the internet. As a result, it can generate fluent, grammatically and syntactically correct text that is consistent with the input prompt.

Open Domain vs. Task Oriented Chatbots

An open domain chatbot is a system like ChatGPT, which can participate in a conversation about any topic and remain on point. Note here that “on point” does not imply factual correctness, only that the answers are relevant to the input questions. For example, you can ask ChatGPT “how many legs do 4 elephants have?” or “how do I make my food more spicy?” or even “what is the definition of an API?” and get a relevant answer.

A task oriented chatbot is one that can perform tasks relevant to a specific use case. For example, a task oriented chatbot can book an appointment, cancel a reservation, reschedule a package delivery and give out specific information related to a restricted use case. Task oriented chatbots are usually deployed by product teams that want to automate their customer service operations.

LLMs: the good, the bad and the ugly

As discussed above, ChatGPT and LLMs have already disrupted, and will continue to disrupt, many aspects of our lives, such as how we search the web, write emails, communicate with businesses, and even create content like this blog post!

ChatGPT is an incredibly skilled tool for generating impressive and articulate content that is highly aligned with a given prompt. Especially in an open-domain context, where minimal constraints exist, ChatGPT can create convincing and realistic content that can be indistinguishable from human-generated text. This ability to consistently generate fluent content regardless of the input prompt is what sets ChatGPT apart and has generated much of the excitement around this technology. With the capability to generate content on a wide variety of topics, including biology, technology, sports and fashion, ChatGPT can perform various tasks such as summarization, spell checking, paraphrasing, and even crafting catchy slogan lines. The versatility and precision of ChatGPT make it a valuable resource for anyone seeking to generate high-quality content quickly and efficiently.

While ChatGPT is very skilled at generating content, it lacks the ability to use third party tools in order to perform actions. ChatGPT can, right now, only generate an answer to your prompt; it cannot go further than that. For example, it cannot check a database of facts and come back with a correct response. As a result, it can only provide answers generated from the information stored in its parameters, which were trained on a 2021 Internet snapshot.

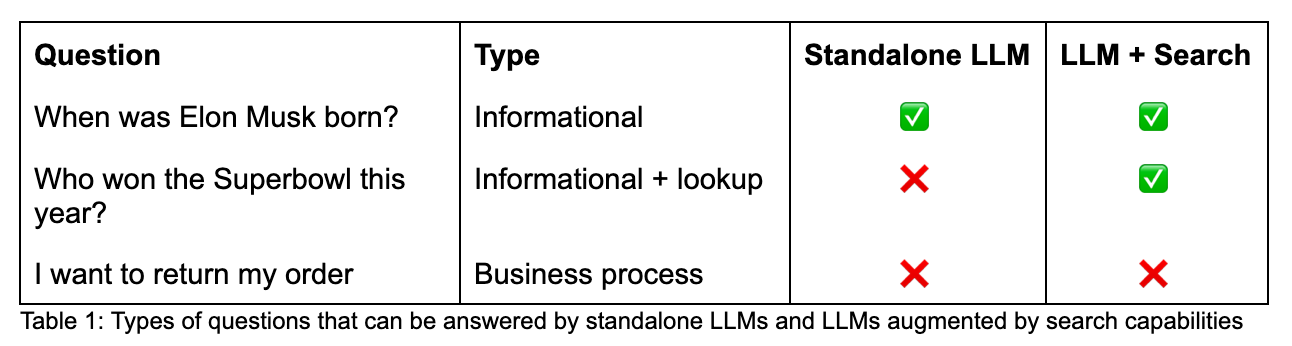

While a standalone LLM is limiting, products like (the new) Bing Search and Google Search will augment LLMs with the ability to look up information related to the input query, so that they can ground their response in it. Therefore, when you ask “Who won the Super Bowl this year?”, these systems will perform an ordinary search over the Internet, and instead of showing you the top website hits, they will use an LLM to synthesize the answer.
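The "search first, then synthesize" pattern described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the documents are made up, `search` is a toy keyword matcher rather than a real search engine, and `llm` is any function that maps a prompt to text (here a placeholder that just echoes the prompt). It does not reflect how Bing Search or Google Search are actually built.

```python
import re
from typing import Callable, List

# Made-up documents standing in for search results (illustration only).
DOCUMENTS = [
    "Return policy: orders can be returned within 5 days of purchase.",
    "Shipping: standard delivery takes 3 to 5 business days.",
    "Food items cannot be returned once delivered.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Toy search: rank documents by the number of words shared with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query: str, passages: List[str]) -> str:
    """Put the retrieved passages in front of the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer the question using only the passages below.\n"
        f"Passages:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query: str, llm: Callable[[str], str], documents: List[str]) -> str:
    """Search first, then let the LLM synthesize an answer from the hits."""
    passages = search(query, documents)
    return llm(build_prompt(query, passages))


def placeholder_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; it simply echoes the prompt."""
    return prompt


hits = search("Can food items be returned?", DOCUMENTS)
print(answer("Can food items be returned?", placeholder_llm, DOCUMENTS))
```

The key design point is that the LLM no longer has to rely only on what is stored in its parameters: the relevant text is handed to it inside the prompt.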

By this point, you might be thinking “Great, I can use something similar to Bing Search as my company’s chatbot”. Well, not so fast. Just because these products can use a “search” tool, it doesn’t mean that they can use any tool. Most likely, your customer support needs are more complex than just having an FAQ chatbot providing informational responses to your users’ questions. An FAQ chatbot is limiting, because it can neither provide personalized answers nor perform tasks that can automate business processes.

Your company’s chatbot should be able to call any API to fetch or write user information such that conversations are handled end-to-end. Moreover, most business processes are rigid and governed by explicit rules. LLMs are probabilistic by nature, and there is no guarantee that they will follow explicitly defined rules. Thus, an LLM will not always be able to follow a business process and might end up making mistakes, which can be very costly for a business.

Table 1: Types of questions that can be answered by standalone LLMs and LLMs augmented by search capabilities

Let’s look at an example of a simple business process, for which a chatbot would require the use of an API and the execution of rigid rules in order to automate it end-to-end. Assume you are an e-shop trying to create a chatbot that automates the process of a user trying to return an order. For the sake of simplicity, let’s assume that the process is defined like this:

A customer wants to return an order and get a refund

- Ask for the order id (verify it’s 8 digits)

- Call the Orders API to get the order details

- If the order was made more than 5 days ago or contains food items, tell the user that the order is not returnable

- Otherwise call the Orders API to create a return entry and give all the necessary steps to the user to perform the return

ChatGPT or any other LLM would not be able to automate this (simple) business flow end-to-end. Hence, when used out-of-the-box, these models cannot offer a complete solution to most customer service needs that a company might have.
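For contrast, the return process listed above is easy to express as deterministic code, which is what a task oriented chatbot effectively executes. The sketch below is only an illustration: `FakeOrdersAPI`, `Order` and the reply texts are hypothetical stand-ins (the article does not specify the Orders API), and the "order not found" branch is an added assumption.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta

RETURN_WINDOW_DAYS = 5  # from the process description above


@dataclass
class Order:
    order_id: str
    placed_on: date
    contains_food: bool


class FakeOrdersAPI:
    """Hypothetical in-memory stand-in for the Orders API."""

    def __init__(self, orders):
        self._orders = {order.order_id: order for order in orders}
        self.returns = []  # ids of orders with a return entry

    def get_order(self, order_id):
        return self._orders.get(order_id)

    def create_return(self, order_id):
        self.returns.append(order_id)


def handle_return_request(order_id: str, api: FakeOrdersAPI, today: date) -> str:
    """Apply the return rules in a fixed order and return the bot's reply."""
    # Step 1: the order id must be exactly 8 digits.
    if not re.fullmatch(r"[0-9]{8}", order_id):
        return "Please provide a valid 8-digit order id."
    # Step 2: fetch the order details.
    order = api.get_order(order_id)
    if order is None:  # assumption: not covered by the original process
        return "We could not find that order."
    # Step 3: orders older than 5 days or containing food are not returnable.
    too_old = (today - order.placed_on) > timedelta(days=RETURN_WINDOW_DAYS)
    if too_old or order.contains_food:
        return "Sorry, this order is not returnable."
    # Step 4: create the return entry and explain the next steps.
    api.create_return(order_id)
    return "Your return has been created. Here are the steps to send it back."


today = date(2023, 3, 10)
api = FakeOrdersAPI([
    Order("12345678", date(2023, 3, 8), contains_food=False),
    Order("87654321", date(2023, 3, 1), contains_food=False),
    Order("11112222", date(2023, 3, 9), contains_food=True),
])
print(handle_return_request("12345678", api, today))
```

Every branch here is explicit and behaves the same way on every run, which is exactly the guarantee a purely probabilistic text generator cannot give.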

The inability of LLMs to use third party tools and follow rigid instructions is not the only limiting factor when trying to use them as task-oriented chatbots. LLMs suffer from the problem of “hallucination”, during which they generate answers that are not consistent with the provided company data. ChatGPT is optimized for eloquent text generation and not for providing factually correct information. This is a significant distinction that limits the applicability of LLMs in many production settings.

How to harness the power of LLMs for customer service

As mentioned, out-of-the-box LLMs cannot be used as a company’s chatbot. However, they can be used in conjunction with task oriented chatbots to combine the best of both worlds: rigid processes with human-like dialog quality.

LLMs can assist in creating a task oriented chatbot

Creating a task-oriented chatbot varies in difficulty depending on the conversational AI platform used, but it is generally a time-consuming process, as a company must explicitly map out its use cases while also getting acquainted with platform-specific concepts. Creating an AI-powered company chatbot can cost between $10,000 and $150,000 depending on the complexity and business requirements. A big chunk of the upfront investment comes from the number of full-time employees dedicated to building and maintaining the chatbot.

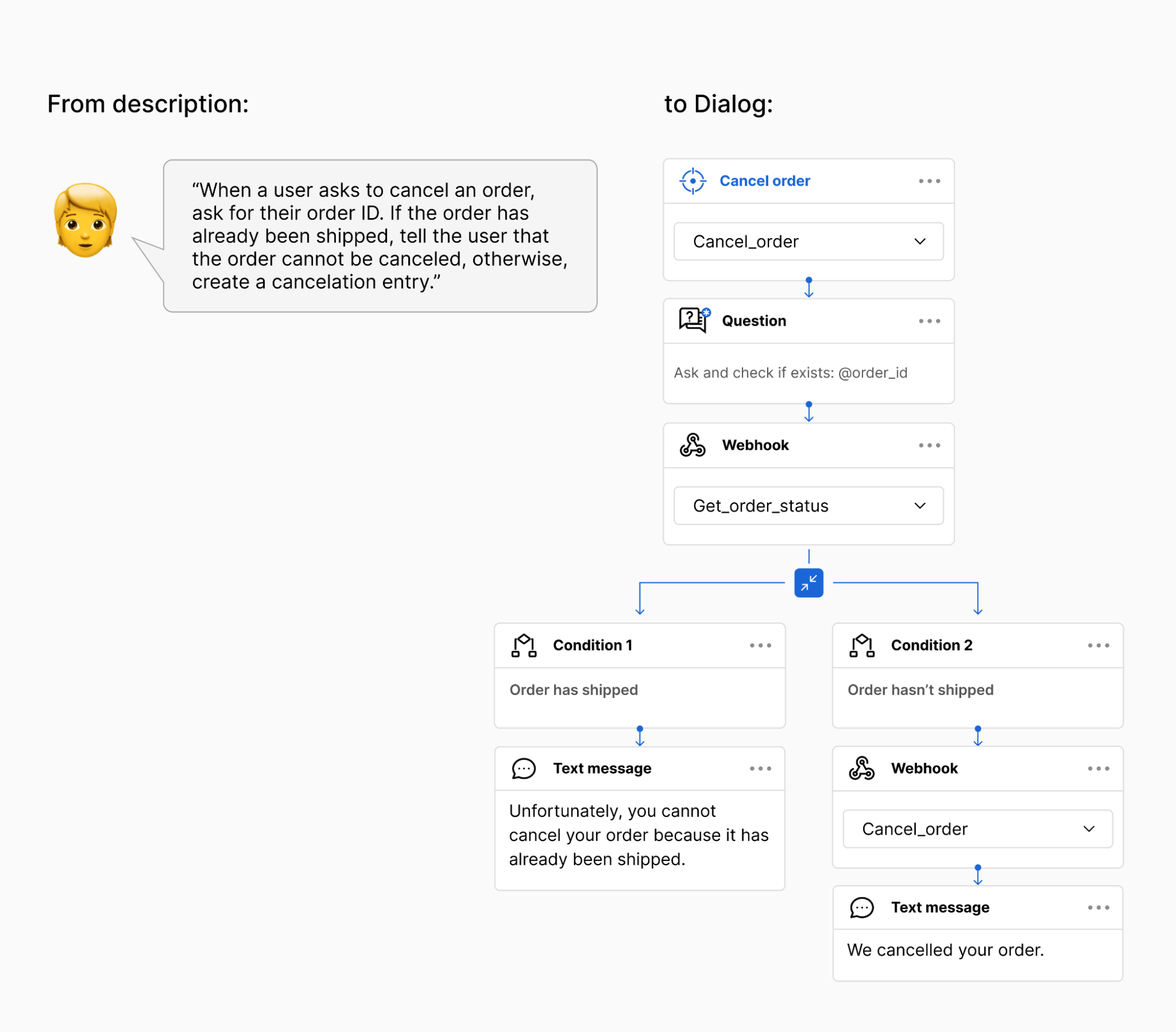

When used correctly, LLMs can automate the creation process by making use of unstructured resources that a company might have, such as knowledge bases and past chat logs. Apart from that, LLMs can be used to minimize the learning curve necessary when building dialog flows. A recent example is the Auto Builder by Môveo.AI, where the dialog flows of the chatbot are built automatically from a simple description of the business process. In the scenario of “returning an order”, a simple description, like the one stated above, would suffice for the dialog flow to be generated automatically by making use of an LLM in the background.

LLMs can assist as a fallback when the chatbot cannot respond

Task-oriented AI chatbots can be incredibly effective in automating business processes and delivering tailored responses to users. However, their utility is often constrained by the need for predetermined answers, as building dialog flows can be a costly and time-consuming process. Additionally, these chatbots may lack the conversational fluency of large language models (LLMs) and can seem robotic when faced with unexpected questions beyond their training data.

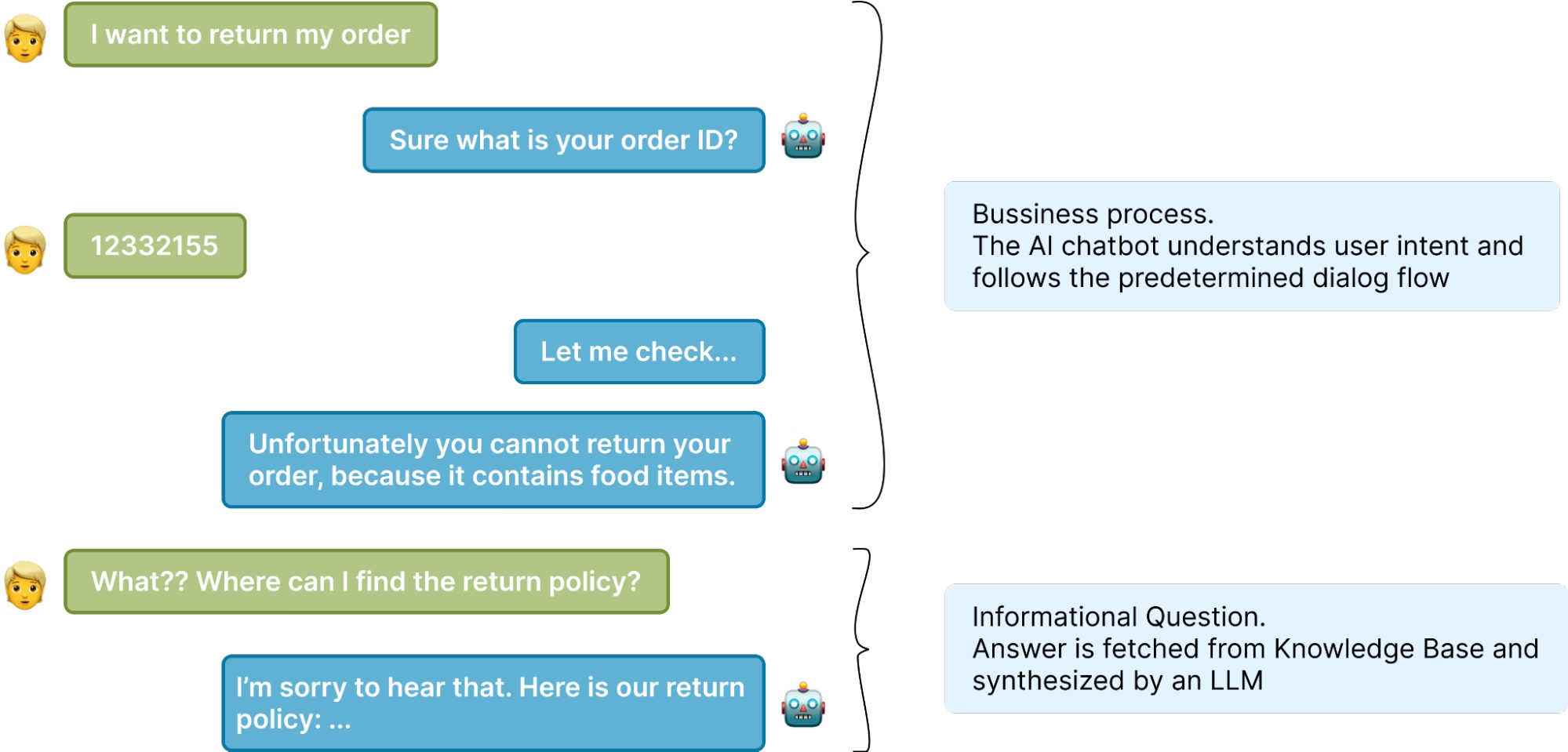

To address these limitations, a combination of task-oriented chatbots and LLMs would be an ideal approach to capturing a broad range of user queries related to both business processes and general FAQs about the company. By leveraging the strengths of both approaches, businesses can build powerful chatbots that provide personalized, contextually appropriate responses, while also offering a higher degree of conversational fluency and adaptability. This approach can help businesses automate processes more effectively and provide more comprehensive and intuitive support to their customers.

All questions related to business processes would be incorporated as dialog flows into the AI chatbot because they need to be explicitly defined and mapped out. On the other hand, questions related to general business information may exist in unstructured data sources such as internal documents, knowledge bases, and chat logs. These sources contain a wealth of information that can be used to supplement the chatbot’s knowledge base and help it respond to user queries more effectively. A recent example of a chatbot that is able to utilize a company’s knowledge base is Fin by Intercom. Fin is able to answer a variety of informational questions (FAQs) related to a company’s knowledge base by using GPT-4 in the background, while transferring more complex questions related to business processes to a live agent.
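The split described above (explicit dialog flows for business processes, an LLM for everything else) amounts to a simple routing decision. The following is a minimal sketch under stated assumptions: the intents, keywords, flow functions and the `llm_fallback` callable are all hypothetical, and a production system would use a trained intent classifier instead of keyword matching. It is not a description of how Fin or any other product works.

```python
import re
from typing import Callable, Optional


def return_order_flow(message: str) -> str:
    """Hypothetical first step of a dialog flow for returning an order."""
    return "Sure, I can help with a return. What is your 8-digit order id?"


def reschedule_delivery_flow(message: str) -> str:
    """Hypothetical first step of a dialog flow for rescheduling a delivery."""
    return "No problem. Which day would you like your package delivered?"


# Business processes that were explicitly mapped out as dialog flows.
FLOWS = {
    "return_order": return_order_flow,
    "reschedule_delivery": reschedule_delivery_flow,
}

# Toy intent detection based on keywords (illustration only).
INTENT_KEYWORDS = {
    "return_order": {"return", "refund"},
    "reschedule_delivery": {"reschedule", "delivery"},
}


def detect_intent(message: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None


def respond(message: str, llm_fallback: Callable[[str], str]) -> str:
    """Use a rigid dialog flow when one matches, otherwise fall back to the LLM."""
    intent = detect_intent(message)
    if intent is not None:
        return FLOWS[intent](message)
    return llm_fallback(message)


def placeholder_fallback(message: str) -> str:
    """Stand-in for an LLM answering from the company's knowledge base."""
    return f"[LLM answer based on the knowledge base for: {message}]"


print(respond("I want to return my order", placeholder_fallback))
print(respond("What are your opening hours?", placeholder_fallback))
```

Keeping the routing step deterministic means the LLM is only consulted for informational questions, while anything that touches a business process always goes through an explicitly defined flow.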

LLMs can assist human agents

As stated above, current LLMs have not reached human intelligence and will not replace human labor. Hence, some of the questions from end users will not be covered by the chatbot and a human agent will have to take over. However, the chatbot’s job might not end there! It can still suggest possible answers to the human agent and so boost their productivity and efficiency. Even if the generated response is not 100% factually correct, the human agent can build on it and save precious time.

Apart from generating recommended responses to human agents, LLMs can be used for various other micro-tasks that might be valuable, such as paraphrasing a human agent’s answer to ensure grammatical/syntactical correctness or summarizing a long conversation or even automatically tagging a conversation depending on its topic.

Conclusion

ChatGPT and LLMs have changed the rules of the game. They have opened up new frontiers in AI and transformed the way we interact with language and information systems. These technologies have, to a degree, already reshuffled the cards of conversational AI for companies, which are now racing to harness this power for business purposes. However, when used as a standalone system, LLMs provide minimal value to product teams that try to implement solutions for their customer service needs. LLMs truly shine when integrated into an ecosystem, as they can be invaluable assets in the development of advanced language-related applications that revolutionize customer experiences and solve critical business problems.